1st Workshop on Maritime Computer Vision (MaCVi)

USV Obstacle Segmentation

TLDR

Download the MODS dataset on the dataset page. Train your model on external data (e.g. using MaSTr1325). Upload a zip file with results and predictions on the upload page.

Overview

The MODS Benchmark is hosted here as part of the upcoming MaCVi '23 Workshop. The goal of MODS is to benchmark segmentation-based and detection-based obstacle detection methods for the maritime domain, specifically for use in unmanned surface vehicles (USVs). It contains 94 maritime sequences captured using a radio-controlled USV. Instead of using general segmentation metrics, MODS scores models using USV-oriented metrics that focus on the obstacle detection capabilities of methods.

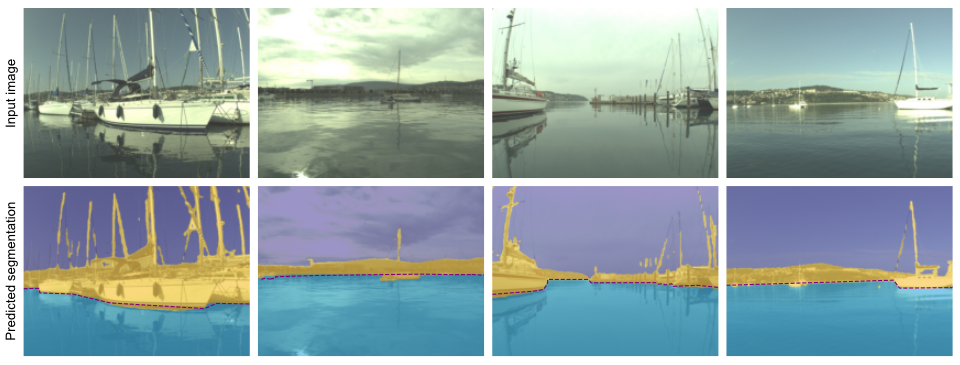

For the obstacle segmentation track, your task is to develop a semantic segmentation method that classifies the pixels in a given image into one of three classes: sky, water or obstacle. The MaSTr1325 dataset was created specifically for this purpose, and we suggest using it to train your models. For an example of training on MaSTr1325 and prediction on the MODS dataset, see WaSR.

Task

Create a semantic segmentation method that classifies the pixels in a given image into one of three classes: sky, water or obstacle. An obstacle is everything that the USV can crash into or that it should avoid (e.g. boats, swimmers, land, buoys).
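
As an illustration of the three-class formulation, the sketch below turns per-pixel class scores into a label map by taking the highest-scoring class at each pixel. The class index order in `CLASSES` and the function name `scores_to_labels` are assumptions made for this example; they are not the encoding prescribed by MODS or MaSTr1325.

```python
import numpy as np

# Hypothetical index order, chosen only for this sketch.
CLASSES = ("obstacle", "water", "sky")


def scores_to_labels(scores):
    """Convert class scores of shape (3, H, W) into a label map of shape (H, W)."""
    scores = np.asarray(scores)
    if scores.ndim != 3 or scores.shape[0] != len(CLASSES):
        raise ValueError("expected scores of shape (3, H, W)")
    # Each pixel is assigned the index of its highest-scoring class.
    return scores.argmax(axis=0)
```

Check the dataset and evaluator documentation for the label encoding that predictions are actually expected to use.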

Dataset

MODS consists of 94 maritime sequences totalling approximately 8000 annotated frames with over 60k annotated objects. It provides annotations for two different types of obstacles: i) dynamic obstacles, which are objects floating in the water (e.g. boats, buoys, swimmers) and ii) static obstacles, which are all remaining obstacle regions (e.g. shoreline, piers). Dynamic obstacles are annotated using bounding boxes. For static obstacles, MODS annotates the boundary between static obstacles and water as polylines.

Evaluation metrics

The MODS evaluation protocol is designed to score predictions in a way that is meaningful for practical USV navigation. Methods are evaluated in terms of:

- Water-edge segmentation (static obstacles) is evaluated by the prediction quality of the boundary between water and static obstacles in terms of accuracy (the average distance between predicted boundary and actual boundary - μA) and robustness (the percentage of the water-edge that is correctly detected, i.e. where the distance between predicted and actual boundary is within a set threshold - μR)

- Dynamic obstacle detection is evaluated in terms of the number of true positive (TP), false positive (FP) and false negative (FN) detections and summarized by precision (Pr), recall (Re) and F1-score (F1). For segmentation methods, an obstacle is considered detected (TP) if the predicted segmentation of the obstacle class inside the obstacle bounding box is sufficient (larger than a set percentage). Predicted segmentation blobs outside obstacle bounding boxes are considered FP detections.
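
The two criteria above can be sketched roughly as follows. This is a simplified illustration, not the MODS evaluator: it assumes the water edge is given as one vertical pixel position per image column, that distances are measured in pixels, and that the thresholds `threshold_px` and `min_coverage` are free parameters. The function names are hypothetical.

```python
import numpy as np


def water_edge_scores(pred_edge_y, true_edge_y, threshold_px):
    """Return (accuracy, robustness) for per-column water-edge positions.

    accuracy:   mean absolute distance between predicted and true edge
    robustness: fraction of columns whose distance is within threshold_px
    """
    pred = np.asarray(pred_edge_y, dtype=float)
    true = np.asarray(true_edge_y, dtype=float)
    dist = np.abs(pred - true)
    return float(dist.mean()), float((dist <= threshold_px).mean())


def is_detected(obstacle_mask, box, min_coverage):
    """Return True if obstacle pixels cover more than min_coverage of the box.

    obstacle_mask: boolean (H, W) array, True where 'obstacle' is predicted
    box:           (x1, y1, x2, y2) in pixels, end-exclusive (an assumption)
    """
    x1, y1, x2, y2 = box
    region = obstacle_mask[y1:y2, x1:x2]
    if region.size == 0:
        return False
    return bool(region.mean() > min_coverage)
```

The actual evaluator may normalize distances or handle overlapping boxes differently, so use it, not this sketch, to produce reported numbers.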

From the perspective of USV navigation, prediction errors closer to the boat are more dangerous than errors farther away. To account for this, MODS also separately evaluates the dynamic obstacle detection performance (PrD, ReD, F1D) within a 15 m radial area in front of the boat (i.e. the danger zone).

To determine the winner of the challenge, the average of F1 and F1D scores will be used as an overall measure of quality of the method. In case of a tie μA will be considered.
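
For reference, the summary scores follow the standard definitions of precision, recall and F1, and the ranking measure is the mean of F1 and F1D. A minimal sketch with hypothetical function names, returning 0.0 when a denominator would be zero (a convention chosen for this example):

```python
def precision_recall_f1(tp, fp, fn):
    """Compute (Pr, Re, F1) from TP, FP and FN counts."""
    pr = tp / (tp + fp) if (tp + fp) else 0.0
    re = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * pr * re / (pr + re) if (pr + re) else 0.0
    return pr, re, f1


def overall_score(f1, f1_danger):
    """Average of F1 (whole image) and F1D (danger zone only)."""
    return (f1 + f1_danger) / 2
```

For example, counts of TP=8, FP=2, FN=2 over the whole image give F1 = 0.8, and TP=6, FP=2, FN=6 inside the danger zone give F1D = 0.6, for an overall score of 0.7.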

Furthermore, we require every participant to submit information on the speed of their method measured in frames per second (FPS). Please also indicate the hardware that you used. Lastly, you should indicate which data sets (also for pretraining) you used during training and whether you used meta data.
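
One simple way to obtain an FPS figure is to time repeated inference over a set of frames. The sketch below assumes a hypothetical `predict` callable that processes a single frame; the warm-up count is an arbitrary choice for this example.

```python
import time


def measure_fps(predict, frames, warmup=1):
    """Return frames per second of `predict` over `frames`."""
    frames = list(frames)
    # Warm-up runs are excluded from timing (e.g. lazy initialization, caches).
    for frame in frames[:warmup]:
        predict(frame)
    start = time.perf_counter()
    for frame in frames:
        predict(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")
```

If inference runs asynchronously on a GPU, make sure `predict` waits for the result before returning; otherwise the measured time will be too short.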

Participate

To participate in the challenge you can perform the following steps:

- Train a semantic segmentation model on external data (don't use MODS for training!). We suggest using the MaSTr1325 dataset for training your models. You can also use additional publicly available training data.

- Note: the WaSR network may be a good starting point for the development of your model. The repository contains the scripts for training on MaSTr1325 and prediction on MODS.

- Download the MODS dataset and the MODS evaluator. Configure the evaluator (set paths to your data) by following the steps in the MODS evaluation repository.

- Use your method to generate segmentation predictions on the MODS sequences (e.g. prediction using WaSR).

- Also note the performance of your method (in FPS) and the hardware used.

- Run MODS evaluation:

      python modb_evaluation.py --config-file configs/mods.yaml <method_name>

- The evaluator will show the evaluation results and create two files in the configured results directory: `results_<method_name>.json` and `results_<method_name>_overlap.json`.

- Create a ZIP archive with your submission. You should include the following files/directories:

  - `results.json`: this is the `results_<method_name>.json` file from the results directory. Note that you have to rename the file.
  - `predictions`: directory containing the predicted segmentation masks of your method on MODS
  - `code`: directory containing the code of your method. Include all the code files your method requires to run

- The structure of the submitted ZIP file (example here, UPDATED) should look something like this:

      results.json
      code
      ├── net.py
      └── ...
      predictions
      ├── kope100-00006790-00007090
      │   ├── 00006790L.png
      │   └── 00006800L.png
      │   └── ...
      ├── ...
      ├── stru02-00118250-00118800
      │   ├── 00118250L.png
      │   ├── 00118260L.png

- Upload your ZIP file along with all the required information in the form here. You need to register first. The uploading option will appear there soon.

- If you provided a valid file and sufficient information, you should see your method on the leaderboards soon. You may upload at most three times per day (independent of the challenge track).
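
The packaging step above can be scripted. The following sketch uses Python's standard `zipfile` module to build an archive with `results.json`, `predictions` and `code` at the top level. The function name `build_submission` and its arguments are hypothetical, and the paths you pass in depend on your own setup.

```python
import zipfile
from pathlib import Path


def build_submission(results_file, predictions_dir, code_dir, out_zip):
    """Write a submission ZIP with results.json, predictions/ and code/."""
    results_file = Path(results_file)
    with zipfile.ZipFile(out_zip, "w", zipfile.ZIP_DEFLATED) as zf:
        # The evaluator output is stored under the required name results.json.
        zf.write(results_file, "results.json")
        for top, src in (("predictions", Path(predictions_dir)),
                         ("code", Path(code_dir))):
            for path in sorted(src.rglob("*")):
                if path.is_file():
                    # Keep the directory layout below each top-level folder.
                    arcname = (Path(top) / path.relative_to(src)).as_posix()
                    zf.write(path, arcname)
    return out_zip
```

Listing the archive contents afterwards, for example with `zipfile.ZipFile(out_zip).namelist()`, is a quick way to confirm the layout before uploading.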

Terms and Conditions

- Submissions must be made before the deadline as listed on the dates page

- You may submit at most 3 times per day independent of the challenge

- The winner is determined by the Average metric, i.e. the average of F1 and F1D (μA is considered in case of a tie)

- You are allowed to use any publicly available data for training but you must list them at the time of upload. This also applies to pre-training.

- Note that we (as organizers) may upload models for this challenge BUT we do not compete for a winning position (i.e. our models do not count on the leaderboard and merely serve as references). Thus, if your method is worse (in any metric) than one of the organizers' models, you are still encouraged to submit your method as you might win.