1st Workshop on Maritime Computer Vision (MaCVi)

MODS - Obstacle Detection

TLDR

Download the MODS dataset from the dataset page. Train your model on any external data (for your convenience, we provide the MODD2 dataset and the older MODD dataset). Upload a zip file with your results and predictions on the upload page.

Overview

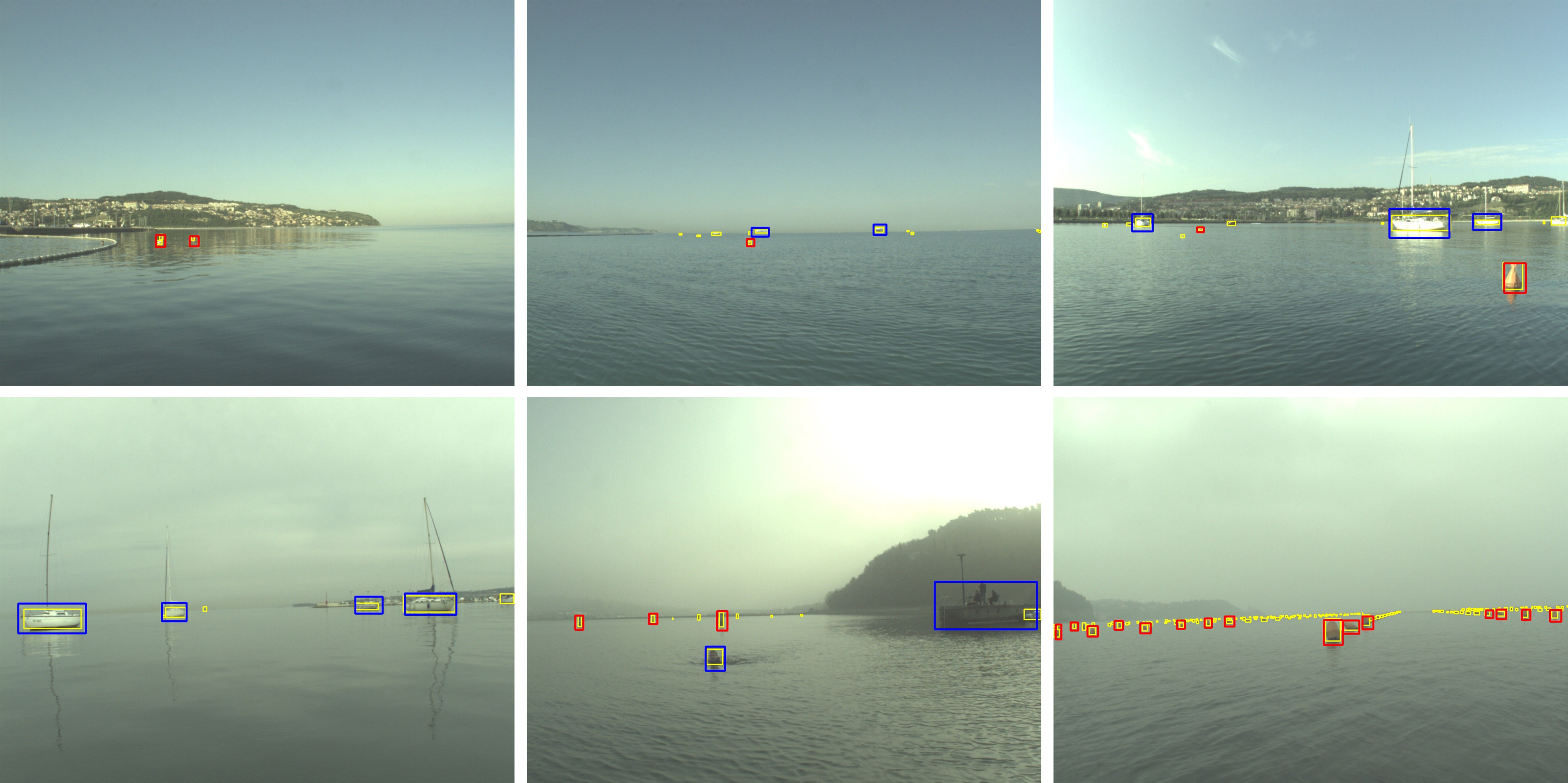

The MODS Obstacle Detection Benchmark is hosted here as part of the upcoming WACV '23 Workshop. The goal of MODS is to benchmark segmentation-based and detection-based obstacle detection methods for the maritime domain, specifically for use in unmanned surface vehicles (USVs). It contains 94 maritime sequences captured using a radio-controlled USV. Instead of relying on generic evaluation metrics, MODS scores models using USV-oriented metrics.

In the obstacle detection task, your goal is to develop an object detection method that detects obstacles on the water surface.

Please see the corresponding GitHub repository for instructions on how to set up your submission file.

Task

Create an object detection method that can detect two specific classes of obstacles on the water surface: vessels and persons. Other obstacles should be detected as well, but they should be classified as belonging to the others category. An obstacle is anything that the USV could crash into or should avoid (e.g. boats, swimmers, land, buoys).

Dataset

MODS consists of 94 maritime sequences totalling approximately 8,000 annotated frames with over 60k annotated objects. It contains annotations for two types of obstacles: i) dynamic obstacles, which are objects floating in the water (e.g. boats, buoys, swimmers), and ii) static obstacles, which are all remaining obstacle regions (e.g. shoreline, piers). Dynamic obstacles are annotated with bounding boxes. For static obstacles, MODS annotates the boundary between static obstacles and water as polylines. Only dynamic obstacles are relevant to the object detection challenge!

Evaluation metrics for object detection challenge

The algorithm should output detections of all waterborne objects of the above-mentioned semantic classes (vessel, person and others) as rectangular, axis-aligned bounding boxes.
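As an illustration of this output, the sketch below models one detection as an axis-aligned box plus one of the three classes, and folds the labels of an off-the-shelf detector into vessel / person / others. The `Detection` record, the `LABEL_MAP` from COCO-style names, and the exact class strings are hypothetical; the actual submission format is defined in the GitHub instructions.

```python
from dataclasses import dataclass
from typing import Tuple

# Class names as written in this description; the exact strings expected by
# the submission format are an assumption (check the GitHub instructions).
MODS_CLASSES = ("vessel", "person", "others")

# Hypothetical mapping from a pretrained detector's (COCO-style) label names.
# Any label not listed here is treated as "others".
LABEL_MAP = {"boat": "vessel", "person": "person"}


@dataclass(frozen=True)
class Detection:
    """One axis-aligned box in pixel coordinates plus its MODS class."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    label: str
    score: float = 1.0

    def __post_init__(self) -> None:
        # Reject labels outside the three challenge classes.
        if self.label not in MODS_CLASSES:
            raise ValueError(f"unknown MODS class: {self.label!r}")
        # Reject degenerate or inverted boxes.
        if self.x_max <= self.x_min or self.y_max <= self.y_min:
            raise ValueError("box must have positive width and height")


def from_source(box: Tuple[float, float, float, float], source_label: str,
                score: float = 1.0) -> Detection:
    """Map a source detector's label onto vessel / person / others."""
    return Detection(*box, label=LABEL_MAP.get(source_label, "others"), score=score)
```

For example, `from_source((10, 20, 50, 40), "buoy")` yields a detection labelled `"others"`, since buoys are obstacles but belong to neither of the two named classes.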

The MODS evaluation protocol is designed to score predictions in a way that is meaningful for practical USV navigation. Detections are evaluated using the standard intersection-over-union (IoU) metric for bounding boxes. A detection counts as a true positive (TP) if it has an IoU of at least 0.3 with a ground-truth box; otherwise it is counted as a false positive (FP). The final score is composed of three metrics:

- Average F1 score taking into account the classes of both the ground truth and the prediction.

- Average F1 score where the class information is ignored.

- Average F1 score of objects within a 15 m radial area in front of the boat (i.e. the danger zone). A ground-truth or detected bounding box is considered to be within the danger zone if at least 50% of its area lies within the danger zone.
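The building blocks of these metrics can be sketched as follows: IoU between two boxes, TP/FP/FN counting at the 0.3 threshold, F1 from those counts, and the 50% danger-zone test. This is a minimal sketch, not the official evaluation code. It assumes greedy one-to-one matching by decreasing IoU, pools counts into a single F1 (how the "average" is taken, e.g. per sequence or per class, is not specified here), and represents the danger zone as a hypothetical per-pixel boolean mask `zone_mask` already projected into the image.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels

IOU_THRESHOLD = 0.3        # TP threshold from the MODS description
DANGER_MIN_FRACTION = 0.5  # share of box area that must lie in the danger zone


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match(preds: Sequence[Box], gts: Sequence[Box],
          thr: float = IOU_THRESHOLD) -> Tuple[int, int, int]:
    """Greedy one-to-one matching by decreasing IoU (assumed); returns (TP, FP, FN)."""
    pairs = sorted(
        ((iou(p, g), i, j) for i, p in enumerate(preds) for j, g in enumerate(gts)),
        reverse=True,
    )
    used_p, used_g = set(), set()
    for score, i, j in pairs:
        if score < thr:
            break  # all remaining pairs have an even lower IoU
        if i not in used_p and j not in used_g:
            used_p.add(i)
            used_g.add(j)
    tp = len(used_p)
    return tp, len(preds) - tp, len(gts) - tp


def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = 2TP / (2TP + FP + FN); defined as 0 when there is nothing to score."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom > 0 else 0.0


def in_danger_zone(box: Box, zone_mask: List[List[bool]],
                   min_fraction: float = DANGER_MIN_FRACTION) -> bool:
    """True if at least `min_fraction` of the box area falls on danger-zone pixels.

    Box coordinates are rounded to the pixel grid; parts of the box outside
    the image count as outside the danger zone.
    """
    x0, y0, x1, y1 = (int(round(v)) for v in box)
    area = max(0, x1 - x0) * max(0, y1 - y0)
    if area == 0:
        return False
    h, w = len(zone_mask), len(zone_mask[0])
    inside = sum(
        zone_mask[y][x]
        for y in range(max(0, y0), min(h, y1))
        for x in range(max(0, x0), min(w, x1))
    )
    return inside / area >= min_fraction
```

Under these assumptions, the class-aware score runs `match` separately for each class, the class-agnostic score runs it once on all boxes, and the danger-zone score first keeps only the predicted and ground-truth boxes for which `in_danger_zone` is true.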

To determine the winner of the challenge, the average of the three F1 scores above will be used as the overall measure of a method's quality. In case of a tie, the first of the F1 metrics listed above will be used to select the winner.
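The ranking rule can be expressed as a sort key: the unweighted mean of the three F1 scores, with the class-aware F1 as the tie-breaker. This is a sketch; the method names and the `(class-aware, class-agnostic, danger-zone)` tuple layout are illustrative, and whether scores are rounded before ties are detected is not stated, so the comparison here is exact.

```python
from typing import Dict, List, Tuple

# (class-aware F1, class-agnostic F1, danger-zone F1), in the order listed above.
Scores = Tuple[float, float, float]


def overall_score(s: Scores) -> float:
    """Unweighted mean of the three F1 metrics."""
    return sum(s) / 3.0


def rank(entries: Dict[str, Scores]) -> List[str]:
    """Order methods best-first by overall score; ties broken by the class-aware F1."""
    return sorted(
        entries,
        key=lambda name: (overall_score(entries[name]), entries[name][0]),
        reverse=True,
    )
```

With `{"a": (0.5, 0.75, 0.25), "b": (0.25, 0.75, 0.5)}`, both methods have an overall score of 0.5, and `"a"` ranks first because its class-aware F1 is higher.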

Furthermore, every participant is required to report the speed of their method in frames per second (FPS), along with the hardware used. Lastly, you should indicate which datasets you used during training (including pretraining) and whether you used metadata.
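One simple way to obtain the FPS figure is to time the detector over a set of frames after a few untimed warm-up calls. In this sketch, `detect` is a hypothetical callable wrapping your model. The challenge does not specify whether image loading or preprocessing should be included, so state what your number covers. On a GPU, call your framework's synchronization routine inside `detect` before it returns, because asynchronous kernel launches can otherwise make the timing look too fast.

```python
import time
from typing import Any, Callable, Sequence


def measure_fps(detect: Callable[[Any], Any], frames: Sequence[Any],
                warmup: int = 5) -> float:
    """Average frames per second of `detect` over `frames`, excluding warm-up calls."""
    for frame in frames[:warmup]:
        detect(frame)  # untimed: lazy initialisation, caches, autotuning, etc.
    timed = frames[warmup:]
    if not timed:
        raise ValueError("need more frames than warm-up iterations")
    start = time.perf_counter()
    for frame in timed:
        detect(frame)
    elapsed = time.perf_counter() - start
    return len(timed) / elapsed if elapsed > 0 else float("inf")
```

`time.perf_counter` is used because it is a monotonic, high-resolution clock intended for measuring short durations.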

Participate

To participate in the challenge you can perform the following steps:

- Train an object detection model on external data (do not use MODS for training!). We suggest using the MODD2 dataset and the older MODD dataset for training your models. You can also use additional publicly available training data.

- Use the evaluation tool we provide to analyze your results and upload them to the server.

Terms and Conditions

- Submissions must be made before the deadline listed on the participate page.

- You may submit at most 3 times per day, regardless of the challenge.

- You are allowed to use any publicly available data for training, but you must list the datasets at the time of upload. This also applies to pretraining.

- Note that we (the organizers) may upload models for this challenge, BUT we do not compete for a winning position (i.e. our models do not count on the leaderboard and merely serve as references). Thus, even if your method is worse (in any metric) than one of the organizers', you are still encouraged to submit it, as you might win.